Now for the third and final part of this series about encryption (the first and second parts can be found here and here). I promised earlier that I would supply example source code for the encryption operations mentioned previously, and that's what this post is all about. The source below shows how to perform each of these operations in just a few lines of code. The only thing covered here that I haven't mentioned before is how to derive algorithm keys (which are byte arrays) from a string, typically a password or a pass-phrase. That's covered in the first few lines, and you should pay special attention to it, because it's something you'll need many times.
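For readers outside .NET, here is a minimal sketch of the key-derivation idea in Python, using PBKDF2 (the algorithm that .NET's `Rfc2898DeriveBytes` class implements) from the standard `hashlib` module. The hash function (SHA-256), the 16-byte salt, the iteration count and the 32-byte key length are illustrative assumptions, not values taken from the .NET code.

```python
import hashlib
import os


def derive_key(password: str, salt: bytes, iterations: int = 100_000, key_len: int = 32) -> bytes:
    """Derive a symmetric key (a byte array) from a password with PBKDF2-HMAC-SHA256.

    The defaults are illustrative assumptions, not recommendations from the post.
    """
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations, dklen=key_len)


# The salt is random and is stored next to the encrypted data (it isn't secret),
# so the same key can be re-derived later from the same password.
salt = os.urandom(16)
key = derive_key("my pass-phrase", salt)
print(len(key))  # 32 bytes, e.g. enough for a 256-bit AES key

# Same password + same salt always gives the same key.
assert derive_key("my pass-phrase", salt) == key
```

The salt is what keeps two users with the same password from ending up with the same key, and the iteration count deliberately slows down brute-force guessing.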

I hope you've enjoyed these cryptography sessions.

12.10.2010

12.08.2010

Software Building vs Software Maintenance

I've been reading "The Mythical Man-Month" over the last few days and I'd like to highlight a particular paragraph: "Systems program building is an entropy-decreasing process, hence inherently metastable. Program maintenance is an entropy-increasing process, and even its most skillful execution only delays the subsidence of the system into unfixable obsolescence." So, for all of us working on program maintenance, this means that even if we introduce new features into the system from time to time, we should keep in mind that someday (maybe not as far into the future as we would like to believe) the system we're working on will be deemed obsolete and eventually replaced! Isn't it so much better to design and implement brand new systems? Maybe that's why we're so eager to refactor existing sub-systems and sometimes even throw them away and build them from scratch... By the way, this is one of those books that should be regarded as a "must-read" for all software engineers/architects!

12.01.2010

.NET Encryption - Part 2

In the first article of this series I briefly covered some .NET encryption topics. Now that we’ve all remembered how encryption works, let’s move on to the real deal.

Let’s start by seeing what .NET provides us with. In the table below I’ve grouped every algorithm (please let me know if I’ve missed any!) and categorized them by purpose (symmetric encryption, asymmetric encryption, non-keyed hashing and keyed hashing) and by implementation.

There are three kinds of implementations:

- Managed: pure .NET implementations

- CryptoServiceProvider: managed wrappers to the Microsoft Crypto API native code implementations

- CNG: managed wrappers to the Cryptography API: Next Generation, designed to replace the previously mentioned CryptoAPI (also known as CAPI)

In the table above, I’ve highlighted in red a few classes which were only introduced in .NET 3.5. However, these new classes (except AesManaged) can only be used on Windows Vista and later operating systems, because the CNG API was first released along with Windows Vista.

Please note that the .NET Framework supports only a few of the CNG features. If you wish to use CNG more extensively in .NET, you may be interested in delving into the CLR Security Library.

So, the first big question is: with so many flavors, which one should we choose? Of course there’s no absolute, definitive answer, as there are too many factors involved, but we can start by pointing out some of the pros and cons of each kind of implementation.

CNG: Its downside is that it only runs on the latest operating systems. On the upside, it is the newer API (you should regard CAPI as the deprecated API), it’s FIPS-Certified and it’s native code (hence likely to be faster than the managed implementations).

Managed: Its downsides are that it isn’t FIPS-Certified and is likely to be slower than the native implementations. On the upside, this approach offers increased portability, as it works across all platforms (and, apart from AesManaged, you don’t even need the latest .NET version).

CSP: CryptoServiceProviders supply you with a bunch of FIPS-Certified algorithms and even allow you to use cryptography hardware devices. Note that .NET support for Crypto Service Providers is a wrapper around CAPI and doesn’t expose all of CAPI’s features.

You may ask “What’s FIPS-Certified?”. FIPS (Federal Information Processing Standards) are a set of security guidelines required by several federal institutions and governments. Your system can be configured to allow only FIPS-Certified algorithms. When faced with such a requirement, using a non-FIPS-Certified algorithm is considered the same as using no encryption at all!

So, now that you know how to choose among the different kinds of implementations, another (perhaps more relevant) question is how to choose the algorithm itself. That mostly depends on the encryption strategy you’re using.

- For symmetric encryption, Rijndael is generally recommended. AES is no more than Rijndael with a fixed 128-bit block size and key sizes restricted to 128, 192 or 256 bits.

- For asymmetric algorithms, RSA is the common option.

- For hashing purposes, the SHA-2 algorithms (SHA256, SHA384, SHA512) are recommended. MD5 has several known flaws and is considered insecure, and SHA1 has recently come to be considered insecure as well.
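To make the non-keyed versus keyed hashing distinction concrete, here is a small sketch using Python's `hashlib` and `hmac` modules rather than the .NET classes from the table: anyone can recompute a SHA-2 digest, while a valid HMAC tag requires the secret key. The message and key are made-up examples.

```python
import hashlib
import hmac

message = b"Transfer 100 EUR to account 42"

# Non-keyed hashing: anyone can compute (and recompute) these digests.
for name in ("sha256", "sha384", "sha512"):
    digest = hashlib.new(name, message).digest()
    print(name, len(digest) * 8, "bits")

# Keyed hashing (HMAC-SHA256): only holders of the secret key can produce a valid tag.
secret_key = b"hypothetical shared secret"
tag = hmac.new(secret_key, message, hashlib.sha256).digest()


def verify(key: bytes, msg: bytes, received_tag: bytes) -> bool:
    """Recompute the HMAC and compare it in constant time."""
    expected = hmac.new(key, msg, hashlib.sha256).digest()
    return hmac.compare_digest(expected, received_tag)


print(verify(secret_key, message, tag))                   # True
print(verify(secret_key, b"Transfer 900 EUR to ...", tag))  # False: message altered
print(verify(b"wrong key", message, tag))                 # False: wrong key
```

`hmac.compare_digest` is used instead of `==` so the comparison time doesn't reveal how many leading bytes matched.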

Published by Unknown at 9:59 PM


Tags: .NET, encryption

11.16.2010

.NET Encryption - Part 1

In this series, I’ll cover .NET encryption, split into several articles to avoid overly long blog posts.

I want to create a kind of personal bookmark for whenever I need it. Heck, that’s one of the reasons most of us keep technical blogs, right?

Encryption is one of those things we don’t tend to use on a daily basis, so it’s nice to have this kind of info stored somewhere to help our memory!

In this Part 1, let’s start by remembering a few concepts:

Symmetric encryption

In this method, a single key is used to encrypt and decrypt data, hence, both the sender and the receiver need to use the exact same key.

Pros:

- Faster than asymmetric encryption

- Consumes less computer resources

- Simpler to implement

Cons:

- The shared key must be exchanged between both parties, which itself poses a security risk: the key exchange must not be compromised!
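The defining property of symmetric encryption, one shared key used in both directions, can be shown with a one-time pad: XOR the data with a random key exactly as long as the message. This is a deliberately simple stand-in chosen for illustration, not one of the .NET algorithms (in practice you'd use AES/Rijndael), and it is only secure if the key is truly random, as long as the message and never reused.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """One-time pad: XOR each data byte with the matching key byte."""
    if len(key) != len(data):
        raise ValueError("a one-time pad key must be exactly as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))


plaintext = b"meet me at noon"
# This key must somehow reach the receiver safely: the key-exchange problem above.
shared_key = secrets.token_bytes(len(plaintext))

ciphertext = xor_bytes(plaintext, shared_key)  # the sender encrypts with the shared key
recovered = xor_bytes(ciphertext, shared_key)  # the receiver decrypts with the *same* key
print(recovered)  # b'meet me at noon'
```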

Asymmetric encryption

This method uses two keys: the private key and the public key. The public key is publicly available to everyone who wishes to send encrypted messages. These encrypted messages can only be decrypted with the private key. This provides a scenario where everyone can send encrypted messages, but only the receiver holding the private key is able to decrypt them.

Pros:

- Safer, because there’s no need to exchange any secret key between the parties involved in the communication

Cons:

- Slower than symmetric encryption

- Bad performance for large sets of data

- Requires a Key management system to handle all saved keys
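A toy, textbook-RSA sketch with tiny primes shows the public/private key split: anyone can encrypt with the public pair (n, e), but only the holder of d can decrypt. The primes 61 and 53 are the classic textbook example values chosen for illustration; real keys use primes hundreds of digits long plus a padding scheme such as OAEP, so this must never be used on real data.

```python
# Toy textbook RSA: illustrative only, insecure (tiny primes, no padding).
p, q = 61, 53
n = p * q                # modulus shared by both keys: 3233
phi = (p - 1) * (q - 1)  # Euler's totient of n: 3120
e = 17                   # public exponent, coprime with phi
d = pow(e, -1, phi)      # private exponent = modular inverse of e (Python 3.8+): 2753

public_key = (n, e)
private_key = (n, d)


def encrypt(m: int, key: tuple[int, int]) -> int:
    """Anyone holding the public key can encrypt: c = m^e mod n."""
    modulus, exponent = key
    return pow(m, exponent, modulus)


def decrypt(c: int, key: tuple[int, int]) -> int:
    """Only the private-key holder can decrypt: m = c^d mod n."""
    modulus, exponent = key
    return pow(c, exponent, modulus)


message = 65  # the "message" must be an integer smaller than n
cipher = encrypt(message, public_key)
print(cipher)                        # 2790
print(decrypt(cipher, private_key))  # 65
```

The modular exponentiations hint at why asymmetric encryption is slower and a poor fit for large data sets: in practice it is typically used to protect a small symmetric key rather than the data itself.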

Hashing

Hashing isn’t encryption per se, but it’s typically associated with it. Given some input data, hashing generates a hash value from which the original data cannot be deduced. Typically, even a small change in the original message produces a completely different hash value. Hash values are typically used to validate that some data hasn’t been tampered with. Also, when sensitive data (like a password) needs to be saved but its value is never to be read (only validated against), the hash can be saved instead of the data.
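The tamper-validation use of hashing can be sketched with SHA-256 from Python's `hashlib`: store the digest alongside the data, recompute it later and compare. The messages are made-up examples. For stored passwords, a salted, deliberately slow derivation such as PBKDF2 is preferable to a single plain hash; this sketch only covers integrity checking.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hash of data as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()


original = b"Pay Alice 100"
stored_hash = fingerprint(original)  # saved when the data was produced

tampered = b"Pay Alice 900"          # a one-character change

print(fingerprint(original) == stored_hash)  # True: data unchanged
print(fingerprint(tampered) == stored_hash)  # False: tampering detected
# Number of hex positions that differ between the two digests (usually most of the 64).
print(sum(a != b for a, b in zip(stored_hash, fingerprint(tampered))))
```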

That's all folks! In the next part(s) I'll cover the encryption algorithms available in .NET, how to choose among them, some real-life scenarios and source code examples.

11.04.2010

Silverlight Strategy Tweaked to handle HTML5

Recently, there has been quite some buzz about Silverlight and HTML5. Microsoft has stated over and over that they are supporting and investing a lot in HTML5. Have you watched the PDC keynote? If you have, you’ve surely noticed the emphasis Ballmer placed on HTML5! Where was Silverlight in that keynote? Yes, I know PDC’s Silverlight focus was all about WP7, but what about all the other platforms?

So where does that leave Silverlight?

- Are they dropping the investment in SL?

- Does it still make sense to support both platforms knowing that their targets and objectives are slightly different?

- Will SL make sense only for smaller devices as WP7?

- Is SL going to be the future WPF Client Profile (just as we now have lighter .NET versions called “Client Profile”), with the gap between WPF and SL continuously reduced until only WPF exists? Will it be named “WPFLight”?

- Can HTML5 completely replace SL? Does it make sense to build complex UI applications in plain HTML5? Can/Should I build something like Dynamics CRM in HTML5?

- Is it the best alternative if you want to invest in developing cloud applications?

Much has been written about this subject; there are many opinions and, mostly, many unanswered questions. These are just a few of the questions I’ve heard and read in the last few weeks/months. These are hardly questions that just popped out of my mind… Let’s call them cloud questions, they are all over the web!

What I would like to point out is that Microsoft is aware of this, and in response they’ve published an announcement on the Silverlight team blog (http://team.silverlight.net) about changes in the Silverlight strategy (http://team.silverlight.net/announcement/pdc-and-silverlight). I would like to quote the last part of it:

We think HTML will provide the broadest, cross-platform reach across all these devices. At Microsoft, we’re committed to building the world’s best implementation of HTML 5 for devices running Windows, and at the PDC, we showed the great progress we’re making on this with IE 9.

The purpose of Silverlight has never been to replace HTML, but rather to do the things that HTML (and other technologies) can’t, and to do so in a way that’s easy for developers to use. Silverlight enables great client app and media experiences. It’s now installed on two-thirds of the world’s computers, and more than 600,000 developers currently build software using it. Make no mistake; we’ll continue to invest in Silverlight and enable developers to build great apps and experiences with it in the future

So, what they are saying here is:

- We acknowledge that HTML5 is the best cross-platform technology for the web

- We think there’s still some room for Silverlight, namely complex user interface client apps

And finally: “we’ll continue investing in it!”. The question that might pop into your mind is “Will they? Really? A long-term investment? Or a rather short one?”

What do I think? I think there’s still room for SL applications, and I’m looking forward to seeing the developments and how SL will continue to close the gap with the full WPF framework.

Published by Unknown at 1:51 AM


Tags: HTML5, SilverLight, WPF

9.20.2010

Memory Dump to the Rescue

Surely you’ve been through those situations where a user or a tester reports a problem that you can’t reproduce in your development environment. Even if your environment is properly set up, some bugs (such as a Heisenbug) can be very difficult to reproduce. Sometimes you even have to diagnose the program in the testing/production environment! Those are the times you wish you had a dump of the process state when the bug occurred. Heck, you’ve even read recently that Visual Studio 2010 allows you to open memory dumps, so you don’t even have to deal with tools like windbg, cdb or ntsd!

Wouldn’t it be great if you could instruct your program to generate a minidump when it stumbles upon a catastrophic failure?

Microsoft supplies a Debug Help Library (DbgHelp.dll) which, among other features, allows you to write these memory dumps through its MiniDumpWriteDump function. The code below is a .NET wrapper around this particular feature, which lets you easily create a memory dump.

Note, however, that the target assembly must be compiled against .NET 4.0; otherwise Visual Studio will only be able to do native debugging.

Having the code to write a dump, let’s create an error situation to trigger the memory dump creation: a small program that tries to divide by zero.

Running this program will throw an obvious DivideByZeroException, which will be caught by the try/catch block and handled by generating a memory dump.

Let’s walk through opening this memory dump inside Visual Studio 2010. When you open a dump file in VS, it presents a Summary page with some data about the system where the dump was generated, the loaded modules and the exception information.

Note that this summary has a panel on the right that allows you to start debugging. We’ll press the “Debug with Mixed” action to start debugging.

Debugging a memory dump starts the debugger at the exception that triggered the dump, so you’ll get the following exception dialog:

After you press the “Break” button, you’ll see the source code below. Note the tooltip with the watch expression “$exception” (you can use this expression in the Immediate or the Watch window whenever you want to check the value of the thrown exception, assuming somewhere up in the stack you are running code inside a catch block), where you can see the divide by zero exception that triggered the memory dump.

You can also inspect the call stack and navigate through it to the Main method where the exception was caught. All the local and global variables are available for inspection in the “Locals” and “Watch” tool windows, which helps in your bug diagnosis.

The approach explained above automatically writes a memory dump when such an error occurs; however, there are times when you need to do post-mortem debugging on applications that don’t automatically do this memory dumping for you. In such situations you have to resort to other ways of generating memory dumps.

One of the most common approaches is to use the windbg debugger. Let’s see how we could have generated a similar memory dump for this application. If we comment out the try/catch block and leave only the division by zero in our application, it will throw the exception and immediately close. Using windbg to capture such an exception and create a memory dump of it is as simple as opening windbg and selecting the executable we’ll be running:

This will start the application and break immediately (this behaviour is useful if we want to set any breakpoints in our code). We’ll order the application to continue by entering the command “g” (you could also select “Go” from the “Debug” menu).

This will execute until the division by zero throws the exception below. When this happens, we’ll order windbg to produce our memory dump with the command ‘.dump /mf “C:\dump.dmp”’.

After this, your memory dump is written to the indicated path and you can open it in Visual Studio as we’ve previously done.

Post-mortem debugging is a valuable resource for diagnosing some complex situations. This kind of debugging is now supported by Visual Studio, making it a lot easier, even though for some trickier situations you’ll still need to resort to the power of native debuggers and extensions like SOS.

9.16.2010

Code Access Security Cheat Sheet

Here’s one thing most developers like: Cheat Sheets!

I’ve made a simple cheat sheet about .NET Code Access Security, more specifically about the declarative and imperative way of dealing with permissions.

Bear in mind that this cheat sheet doesn’t cover any of the new features brought by the .NET 4.0 security model.

This cheat sheet may be handy for someone who doesn’t use these features often and tends to forget how to use them, or for someone studying for the 70-536 exam.

Published by Unknown at 5:27 PM


Tags: .NET, CAS, Cheat Sheet, Security

9.07.2010

Viewing __ComObject with a Dynamic View

If you've ever had to debug a .NET application that uses COM objects, you've probably added such an object to the Watch window or tried to inspect it in the Immediate window, only to find out it was a __ComObject which wouldn't give you any hint about its members.

Visual Studio 2010 gives you a solution for this problem: a new feature called Dynamic View. As long as your project targets the .NET Framework 4.0, you can now inspect these objects.

Let's take a look at this code:

This code is creating a Visual Studio instance and instructing the debugger to break execution right after the creation so that we can inspect the __ComObject.

There are two ways of doing this:

- adding "vsObj" to the watch window and expanding "Dynamic View" node

- adding "vsObj, dynamic" to the watch window

Also note that if your object contains other __ComObjects, you can inspect them in the same way, as shown here in the "AddIns" property.

This "Dynamic View" feature was developed mainly to inspect dynamic objects (another new feature from .NET 4.0). That's where its name comes from.

9.06.2010

RIP : Object Test Bench

After spending some time looking for Object Test Bench in the VS2010 menus, I decided to do a quick search and found this VS2010 RIP list, where Habib supplies a partial list of the features removed in VS2010. I was surprised to find out they have removed Object Test Bench!

It's not as if I really needed it, there's nothing I would do with it that I can't do with Immediate window, but I did use it from time to time for some lightweight testing. I guess I won't be using it anymore, will I?

But maybe you'll be even more surprised to find out they also removed IntelliSense for C++/CLI!!!

Published by Unknown at 5:53 PM


Tags: VS2010

9.03.2010

Refactoring with NDepend

I’ve recently been granted an NDepend Professional License, so I’ve decided to write a review about it. I took this opportunity to refactor one application I’ve been working on, which suddenly grew out of control and needed a major refactor.

Note: This article is mainly focused on NDepend, a tool that supplies great code metrics in a configurable way through its Code Query Language. If you don’t know this tool, jump to http://www.ndepend.com/ and find out more about it.

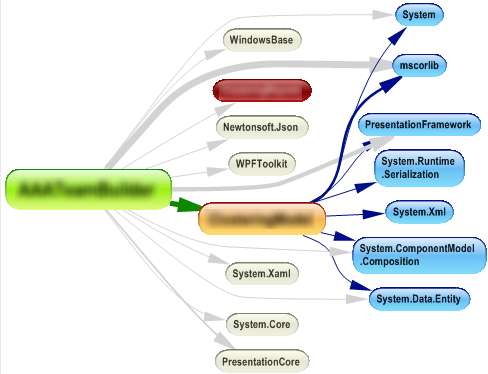

This application grew out of control pretty quickly, and one of the things I wanted to do in this refactoring was to split the assembly into two or three logical assemblies. For this, I decided to use NDepend’s dependency matrix and dependency graph to evaluate where the best place to split the assemblies was. I validated the number of connections I would have between the assemblies to split. For a handful of classes I decided some refactoring was needed in order to reduce the number of dependencies between assemblies. Refactoring these classes allowed me to reduce coupling between the assemblies while increasing each assembly’s relational cohesion (the relations among the assembly’s own types), which was lower than it should be. There is a metric for this relational cohesion, which I used to evaluate how far I should go.

Dependency Graph of all the assemblies involved (I've blurred the names of my assemblies for obvious reasons)
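As I understand NDepend's metric definitions, relational cohesion is H = (R + 1) / N, where R is the number of type relationships internal to the assembly and N the number of types it contains, with roughly 1.5 to 4.0 suggested as a healthy range. The Python sketch below applies that formula to a hypothetical dependency map (the type names are invented, and this isn't NDepend's own code or CQL) to show how moving loosely related types out of an assembly changes H.

```python
def relational_cohesion(assembly_types: set[str], depends_on: dict[str, set[str]]) -> float:
    """H = (R + 1) / N for one assembly.

    assembly_types -- the N types that belong to the assembly
    depends_on     -- type -> set of types it uses (possibly in other assemblies)
    R counts only dependencies whose both ends lie inside the assembly.
    """
    internal = sum(
        1
        for source in assembly_types
        for target in depends_on.get(source, set())
        if target in assembly_types and target != source
    )
    return (internal + 1) / len(assembly_types)


# Hypothetical dependency map of the assembly before splitting it.
deps = {
    "OrderView": {"OrderController"},
    "OrderController": {"Order", "OrderRepository"},
    "OrderRepository": {"Order"},
    "Order": set(),
    "ReportView": {"ReportBuilder"},
    "ReportBuilder": {"Order"},
}

before = set(deps)  # one assembly: R = 6, N = 6
after = {"OrderView", "OrderController", "OrderRepository", "Order"}  # reports moved out: R = 4, N = 4
print(round(relational_cohesion(before, deps), 2))  # 1.17
print(round(relational_cohesion(after, deps), 2))   # 1.25
```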

Further inspection of the dependency matrix led me to the conclusion that my model-view-controller separation was being violated in a few places, so I added a TODO comment in those places. I’ll fix that after this major refactoring is done, to avoid too many refactorings at once (remember that one of the rules of thumb about refactoring is to do it in baby steps, to avoid introducing errors into a previously working codebase).

Next it was time for some fine-grained refactorings. This is where the NDepend code metrics were most valuable. I don’t want to go into too much detail here, so I’ll just pick a few of the metrics I used and talk about them.

The first metrics I decided to look at were the typical ones that target code maintainability, such as “Cyclomatic Complexity” and “Number of lines of code”. No big surprises here. I found two methods that tend to show up in the queries, but these contain complex algorithm implementations that aren’t easy to change in a way that would improve these statistics. These are important metrics, so I tend to check them first.
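Cyclomatic complexity counts the linearly independent paths through a method; on a control-flow graph it is McCabe's M = E - N + 2P (edges, nodes, connected components). NDepend derives it from the code with its own counting rules, so the Python sketch below only illustrates the formula on a hand-built control-flow graph of a hypothetical method containing one `if` and one loop.

```python
def cyclomatic_complexity(edges: list[tuple[str, str]], components: int = 1) -> int:
    """McCabe's M = E - N + 2P for a control-flow graph given as a list of edges."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components


# Control-flow graph of a hypothetical method:
#   if (x > 0) { x = 1; }
#   while (x < 10) { x++; }
#   return x;
cfg = [
    ("if", "then"),      # condition true
    ("if", "loop"),      # condition false: skip the then-branch
    ("then", "loop"),
    ("loop", "body"),    # loop condition true
    ("body", "loop"),    # back edge
    ("loop", "return"),  # loop condition false
]
print(cyclomatic_complexity(cfg))  # 3, i.e. 1 + two decision points
```

Each extra `if`, loop or boolean branch adds a path to test, which is why this metric tends to flag the same methods as the lines-of-code metric.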

On to some other interesting metrics...

Efferent coupling

This metric told me something I had forgotten: I suck at designing user interfaces! I tend to cram a window with a bunch of controls instead of creating separate user controls where appropriate. Of course, that generally produces a class (the window) with too many responsibilities and too much code (event handling/wiring). Also, the automatically generated code-behind for these classes tends to show up repeatedly across several code metrics.
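At type level, efferent coupling is simply the number of distinct other types a type depends on, which is why a window that hosts every control and handler scores so high. The Python sketch below uses a hypothetical dependency map (all type names are invented) to compare a monolithic window with one split into user controls.

```python
def efferent_coupling(type_name: str, depends_on: dict[str, set[str]]) -> int:
    """Ce: number of distinct types, other than itself, that type_name uses."""
    return len(depends_on.get(type_name, set()) - {type_name})


# Before: the window touches everything itself.
monolithic = {
    "MainWindow": {"Customer", "Order", "Invoice", "CustomerGrid", "OrderGrid",
                   "InvoicePrinter", "Validator", "Logger"},
}

# After extracting user controls, the window only composes them.
split = {
    "MainWindow": {"CustomerPanel", "OrderPanel", "Logger"},
    "CustomerPanel": {"Customer", "CustomerGrid", "Validator"},
    "OrderPanel": {"Order", "Invoice", "OrderGrid", "InvoicePrinter"},
}

print(efferent_coupling("MainWindow", monolithic))  # 8
print(efferent_coupling("MainWindow", split))       # 3
```

The total number of dependencies doesn't disappear; it gets distributed over smaller classes that each have a single responsibility.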

Dead code metrics

These allow you to clean up dead code that’s rotting right in the middle of your code base, polluting the surrounding code and increasing complexity and maintenance costs. Beware, however, that NDepend only marks *potentially* dead code. Say that you are overriding a method that’s only called from a base class in a referenced assembly: since you have no calls to that method, and the source of the base class where the method is called is absent from the analysis, NDepend will conclude that the method is potentially dead code. That doesn’t mean, however, that you are free to delete it. Also remember that a method can be called through reflection (which makes it pretty much impossible for a static analysis tool to say your method is definitely dead code), so think twice before deleting a method. But do think! If it’s dead code, it’s got to go!
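The reason a static tool can only say *potentially* dead becomes obvious in a sketch: it can only flag members that nothing in the analyzed code calls. The Python sketch below uses a hypothetical call graph (all member names are invented, and this isn't NDepend's CQL). It flags the override and the reflection target along with the genuinely dead method, until you tell it about those external entry points.

```python
def potentially_dead(methods: set[str], calls: dict[str, set[str]],
                     known_entry_points: set[str]) -> set[str]:
    """Methods that no analyzed method calls and that aren't known entry points."""
    called = {callee for callees in calls.values() for callee in callees}
    return methods - called - known_entry_points


methods = {"Program.Main", "Parser.Parse", "Parser.OldParse",
           "MyControl.OnRender", "Plugin.Run"}
calls = {
    "Program.Main": {"Parser.Parse"},
    "Parser.Parse": set(),
}

# Only Main is declared as an entry point: the override and the reflection target look dead too.
print(sorted(potentially_dead(methods, calls, {"Program.Main"})))
# ['MyControl.OnRender', 'Parser.OldParse', 'Plugin.Run']

# Whitelist the override (called by a base class outside the analysis)
# and the method invoked through reflection: only the genuinely dead one remains.
entry_points = {"Program.Main", "MyControl.OnRender", "Plugin.Run"}
print(sorted(potentially_dead(methods, calls, entry_points)))  # ['Parser.OldParse']
```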

Naming policy metrics

Here I started by changing some of the rules to fit my needs. For example, the naming convention constraints define that instance fields should be prefixed with “m_”, while I tend to use “_”. These are the kind of metrics that are useful for some code cleanup and for making sure you keep a consistent code style. NDepend’s predefined naming conventions may need to be customized to suit the code style you and your co-workers opted for. As good as these naming convention rules can be, they’re still far from what you can achieve with tools built specifically for this. If you’re interested in enforcing a code style across your projects, I would suggest opting for alternatives like StyleCop; however, for lightweight naming convention checks NDepend might just suit your needs.
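Customizing such a rule mostly means changing the pattern it checks against. Here is a rough sketch of a configurable field-prefix check, written in Python with regular expressions. It isn't NDepend's CQL syntax, and the field names are invented.

```python
import re


def naming_violations(field_names: list[str], prefix: str) -> list[str]:
    """Return field names that don't match <prefix><lowercase letter><letters/digits>."""
    pattern = re.compile(re.escape(prefix) + r"[a-z][A-Za-z0-9]*$")
    return [name for name in field_names if not pattern.match(name)]


fields = ["_customer", "m_orders", "_total", "count"]

print(naming_violations(fields, "m_"))  # ['_customer', '_total', 'count']  (the "m_" rule)
print(naming_violations(fields, "_"))   # ['m_orders', 'count']  (my "_" convention)
```

Whichever prefix the team picks, keeping it as a single parameter means the convention is defined once and applied everywhere.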

Note: Don’t get me wrong, I’m not saying that my convention is better or worse than the one NDepend uses. When it comes to conventions I believe that what’s most important is to have one convention! Whether the convention specifies that instance fields are prefixed by “m_” or “_” or something else is another completely different discussion…

A Nice Feature not to be overlooked

I know the hassle of introducing this kind of tool on a large pre-existing code base. You just get swamped in warnings, which in turn leads to ignoring any warning that may arise, whether in the old code or in the code you develop from now on. Once again NDepend metrics come to the rescue: meet “CodeQuality From Now!”. This is a small group of metrics that applies to the new code you create, so this is the one you should pay attention to if you don’t want to fix all your code immediately. Improving the code over time is usually the only possible decision (we can’t stop a project for a week and just do cleanup and refactorings, right?), so while you’re in that transition period make sure you keep a close eye on the “CodeQuality From Now!” metrics, because you don’t want to introduce further complexity.
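The idea behind checking only new code can be sketched as comparing the current analysis with a baseline snapshot and reporting violations only for methods that are new or changed. In the Python sketch below, the method names, the plain body comparison used to detect changes and the complexity threshold of 10 are all assumptions for illustration, not how NDepend implements it.

```python
def new_or_changed(baseline: dict[str, str], current: dict[str, str]) -> set[str]:
    """Names of methods that are absent from the baseline or whose body changed."""
    return {name for name, body in current.items() if baseline.get(name) != body}


def violations_from_now(baseline: dict[str, str], current: dict[str, str],
                        complexity: dict[str, int], max_complexity: int = 10) -> list[str]:
    """Apply a complexity rule only to new or changed methods."""
    return sorted(name for name in new_or_changed(baseline, current)
                  if complexity[name] > max_complexity)


baseline = {"Legacy.Huge": "/* legacy body */", "Order.Total": "return a + b;"}
current = {
    "Legacy.Huge": "/* legacy body */",   # untouched legacy code: ignored for now
    "Order.Total": "return a + b + tax;",  # changed: checked
    "Order.Export": "/* new body */",      # new: checked
}
complexity = {"Legacy.Huge": 42, "Order.Total": 3, "Order.Export": 15}

print(violations_from_now(baseline, current, complexity))  # ['Order.Export']
```

The legacy method still has a complexity of 42, but it only starts being reported once someone touches it.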

Visual NDepend vs NDepend Visual Studio addin

I also would like to make a brief comment about Visual Studio integration and how I tend to use NDepend.

While having NDepend embedded in Visual Studio is fantastic, I found that I regularly go back to Visual NDepend whenever I want to investigate further. I would say I tend to use it inside Visual Studio whenever I want to do some quick inspection or to consider the impact of a possible refactoring. For anything more complex I tend to go back to Visual NDepend, mainly because having an entire window focused on the issue I’m tracking is better than having some small docked panel in Visual Studio (I wish I had a dual-monitor setup for Visual Studio!)

Feature requests

I do have a feature request. Just one! I would like to be able to exclude types/members/etc. from analysis, and I would like to do it in the NDepend project properties area. A treeview with checkboxes for all the members we want to include/exclude from the process would be great. I’m thinking of the treeview used in some obfuscator tools (e.g. Xenocode Obfuscator), which contain the same kind of tree to select which types/members should be obfuscated.

This is useful for automatically generated code. I know that the existing CQL rules can be edited to exclude auto-generated code, but it’s a bit of a hassle to handle all those CQL queries. Another alternative would be to “pollute” my code with some NDepend attributes to mark that the code is automatically generated, but I don’t really want to redistribute my code along with the NDepend redistributable library.

Also, my project has a reference that is set to embed interop types in the assembly (a feature new in .NET 4.0), and currently there is no way (at least that I know of) to exclude these types from analysis.

Conclusions

NDepend will:

- make you a better developer

- enforce some best practices we tend to forget

- improve your code style and, most importantly, code maintainability

There are many other interesting NDepend metrics, and features besides metrics, that I didn’t mention. I picked just a few features I like and use; I could have picked others, but I think this is already too long. Maybe some other time!

Also note that NDepend is useful as an everyday tool, not just for when you're doing refactorings as I've exemplified.

If you think I’ve missed something important go ahead and throw in a comment.

8.26.2010

WSDL Flattener

If you've ever developed a web service in WCF, you probably already know that WCF automatically generates WSDL documents by splitting them into several files according to their namespaces and using imports to link the files. This is all very nice and standards-compliant, as it should be; the problem is that there are still many tools out there that don't support this. If you've ever had to deal with this issue before, you probably used Christian Weyer's blog post as a solution to make WCF output single-file WSDL documents.

A few days ago I had a similar problem: I didn't want to change the way WCF produces WSDL, but I did want a single-file WSDL for documentation purposes. I searched for a tool to do this but didn't find any, so I did what a good developer should do and developed a small tool for it. I'm also making it available under an open source license, so if you ever need it you can find it here: http://wsdlflattener.codeplex.com/
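One way to flatten a document is to inline whatever its `import` elements point to. The Python sketch below is not the WSDL Flattener's code. It assumes the referenced schemas have already been downloaded into a dictionary keyed by `schemaLocation` (a hypothetical stand-in for fetching WCF's `?xsd=xsd0` URLs), handles only `xsd:import` inside `wsdl:types` (not `wsdl:import`), and uses the standard `xml.etree.ElementTree` module.

```python
import xml.etree.ElementTree as ET

WSDL_NS = "http://schemas.xmlsoap.org/wsdl/"
XSD_NS = "http://www.w3.org/2001/XMLSchema"
ET.register_namespace("wsdl", WSDL_NS)  # keep readable prefixes on output
ET.register_namespace("xsd", XSD_NS)


def inline_schema_imports(wsdl_xml: str, schemas: dict[str, str]) -> ET.Element:
    """Copy every schema referenced via xsd:import/@schemaLocation into wsdl:types.

    The import elements are kept minus their schemaLocation, since the imported
    namespace is now defined inside the same document.
    """
    root = ET.fromstring(wsdl_xml)
    types = root.find(f"{{{WSDL_NS}}}types")
    if types is None:
        return root
    pending = types.findall(f"{{{XSD_NS}}}schema")
    inlined: set[str] = set()
    while pending:
        schema = pending.pop()
        for imp in schema.findall(f"{{{XSD_NS}}}import"):
            location = imp.attrib.pop("schemaLocation", None)
            if location is None or location in inlined:
                continue
            inlined.add(location)
            imported = ET.fromstring(schemas[location])
            types.append(imported)
            pending.append(imported)  # imported schemas may import further schemas
    return root


main_wsdl = f"""<wsdl:definitions xmlns:wsdl="{WSDL_NS}" xmlns:xsd="{XSD_NS}">
  <wsdl:types>
    <xsd:schema>
      <xsd:import schemaLocation="svc?xsd=xsd0" namespace="urn:orders"/>
    </xsd:schema>
  </wsdl:types>
</wsdl:definitions>"""

downloaded = {  # hypothetical, already-fetched schema documents
    "svc?xsd=xsd0": f'<xsd:schema xmlns:xsd="{XSD_NS}" targetNamespace="urn:orders">'
                    '<xsd:import schemaLocation="svc?xsd=xsd1" namespace="urn:common"/>'
                    '<xsd:element name="Order" type="xsd:string"/></xsd:schema>',
    "svc?xsd=xsd1": f'<xsd:schema xmlns:xsd="{XSD_NS}" targetNamespace="urn:common">'
                    '<xsd:element name="Money" type="xsd:decimal"/></xsd:schema>',
}

flat = inline_schema_imports(main_wsdl, downloaded)
print(ET.tostring(flat, encoding="unicode"))
```

The `inlined` set keeps a schema that is imported from several places from being copied twice.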


Published by Unknown at 3:22 PM

Tags: .NET, WCF, WebServices, WSDL

6.22.2010

Object Test Bench Overview

Today I'd like to talk a bit about the Object Test Bench (henceforth OTB, for the sake of simplicity). It seems to be one of the most underrated Visual Studio features. Most developers I know have never used it, or have used it only once.


There are some caveats that stop some users from using the OTB at all. One of the most annoying is that the project whose classes you want to test in the OTB has to be set as the solution's startup project. I find myself setting the project I want to test as the startup project (even when it's a class library) just so that I can use the OTB. Despite these caveats, the OTB is overall a nice feature.

Microsoft states that the OTB's purposes are:

- Teaching object-oriented programming concepts without going into language syntax

- Providing a lightweight testing tool designed for academic and hobbyist programmers to use on small and simple projects

- Shortening the write-debug-rewrite loop

- Testing simple classes and their methods

- Discovering the behavior of a library API quickly

OTB operations are accessible from the "Class View" and the "Class Designer". Most of the time we don't have a class diagram to interact with the classes, so I usually end up using Class View.

Here's a practical example. Say you have three classes: Message, MessageProcessor and ProcessedData. The MessageProcessor processes a Message instance to create a ProcessedData instance. If you suspect the MessageProcessor has a bug and wish to debug it, you just need to create a Message instance and a MessageProcessor instance, and invoke the method that handles the Message. Depending on what you wish to debug, you may just inspect the output (a ProcessedData instance), or you may set a few breakpoints in the MessageProcessor class to better diagnose what's happening. Here are a few screenshots that show how this can be done in the OTB.

Screenshot: The OTB after creating a Message and a MessageProcessor instance, invoking the ProcessMessage method on the MessageProcessor instance.

Screenshot: When the method has a return value, you're allowed to save and name the returned object.

Screenshot: The OTB allows you to inspect these objects by hovering over them.

Screenshot: You can also inspect and use the OTB locals in the Immediate window, by the names you've previously given them.
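To try the walkthrough yourself you need classes along those lines. The sketch below is hypothetical: only the three class names and the ProcessMessage method come from the example above, while the members and the processing logic (a simple word count) are made up for illustration. In the OTB you would create a Message and a MessageProcessor through Class View and invoke ProcessMessage on the processor, as in the screenshots; a breakpoint inside ProcessMessage is hit during that invocation.

```csharp
using System;

// Hypothetical input type.
public class Message
{
    public string Sender { get; set; }
    public string Body { get; set; }
}

// Hypothetical output type.
public class ProcessedData
{
    public string Sender { get; set; }
    public int WordCount { get; set; }
}

public class MessageProcessor
{
    // The method you would invoke from the OTB (and set breakpoints in).
    public ProcessedData ProcessMessage(Message message)
    {
        if (message == null) throw new ArgumentNullException(nameof(message));

        // Illustrative processing only: count the words in the body.
        string[] words = (message.Body ?? string.Empty)
            .Split(new[] { ' ', '\t', '\r', '\n' }, StringSplitOptions.RemoveEmptyEntries);

        return new ProcessedData { Sender = message.Sender, WordCount = words.Length };
    }
}
```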

6.19.2010

IntelliTrace not collecting data

If you've been using Visual Studio 2010, you're probably enjoying the fantastic IntelliTrace feature. It's truly great, and if you don't know it yet, it's well worth spending some time investigating.

Recently, IntelliTrace stopped working for one of my pet projects. Instead of showing the gathered data, it showed the message "IntelliTrace is not collecting data for this debugging session."

Screenshot: IntelliTrace is not collecting data for this debugging session

The full message (shown above) hints that there can be several reasons for this issue. However, it wasn't useful in my situation.

I've discovered that there's at least one other situation where IntelliTrace stops working without any apparent reason.

Apparently, you can't have the SQL Server debugger enabled. So, if you ever stumble upon this problem, just open the project properties and, under the "Debug" tab, make sure the "Enable SQL Server debugging" checkbox is unchecked!

Screenshot: The SQL Server debugging option that needs to be disabled for IntelliTrace to work

3.15.2010

Reflecting Type Equivalence

Today, I want to write about another of the new features in .NET 4: Type Equivalence and Embedded Interop Types. This feature allows you to embed the interop types you reference directly in your assembly, which in the end lets you avoid deploying interop assemblies.

Anyway, I don't want to go into much detail about this. You can read more about it in "Type Equivalence and Embedded Interop Types" and "Walkthrough: Embedding Types from Managed Assemblies".

What I want to do here is show you how the types get placed in the assembly. This is pretty much just a quick look with Reflector at an assembly with embedded COM types.

So here's what I've done to test this:

I created a simple project and added a reference to MSXML; then, in the added reference's properties, I made sure the property "Embed Interop Types" was set to "True" (this is what determines whether a reference is embedded).

Then I inserted this small code snippet that uses the library:

Note that in the code above I have a comment with the code I would regularly use to create an instance of the DOMDocument class. However, you can't use code like this if you wish to use type equivalence: classes aren't embedded, interfaces are. If you try to use that line of code, you'll get the compilation error below.

Now, let's finally look at the compiled assembly with the .NET developer's favorite tool: Reflector.

As you can see above, a small part of the MSXML2 namespace has been included in our brand new assembly. Note that only the interfaces referenced by our code (along with their own dependencies) were included, which means that not only do you avoid redistributing an interop, you also end up reducing the size of your application's dependencies!

3.11.2010

WPF TreeView bound to an XML file monitored for changes

Here's a code snippet of a WPF TreeView bound to an XML file, which gets reloaded every time the XML file changes on disk.

The sample XML file I'm using (located at "C:\data.xml"):

The XAML code:

And finally, the C# code-behind for the same window, which monitors the XML file for changes and updates the binding accordingly:
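As a minimal sketch of the monitoring half only (not the original code-behind), the class below watches one file with FileSystemWatcher and re-parses it with LINQ to XML whenever it changes. The XmlFileMonitor class, its Reloaded event and the five-attempt retry are hypothetical choices. FileSystemWatcher raises its events on a thread-pool thread, so in a WPF window the Reloaded handler would have to marshal back to the UI thread (for instance through the window's Dispatcher) before touching the TreeView's binding.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Xml;
using System.Xml.Linq;

// Hypothetical helper: watches a single XML file and raises Reloaded with the re-parsed document.
public sealed class XmlFileMonitor : IDisposable
{
    private readonly string _path;
    private readonly FileSystemWatcher _watcher;

    public event Action<XDocument> Reloaded;

    public XmlFileMonitor(string path)
    {
        _path = Path.GetFullPath(path);
        _watcher = new FileSystemWatcher(Path.GetDirectoryName(_path), Path.GetFileName(_path))
        {
            NotifyFilter = NotifyFilters.LastWrite | NotifyFilters.Size
        };
        // Changed may fire several times per save; each call simply reloads the file.
        _watcher.Changed += (sender, e) => Reload();
        _watcher.EnableRaisingEvents = true;
    }

    // Reads and parses the file, retrying briefly while an editor may still have it
    // locked or half-written. Returns false if it never became readable.
    public bool Reload()
    {
        for (int attempt = 0; attempt < 5; attempt++)
        {
            XDocument doc;
            try
            {
                doc = XDocument.Load(_path);
            }
            catch (Exception ex) when (ex is IOException || ex is XmlException)
            {
                Thread.Sleep(100);
                continue;
            }
            Reloaded?.Invoke(doc);
            return true;
        }
        return false;
    }

    public void Dispose() => _watcher.Dispose();
}
```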


Published by Unknown at 12:54 AM

Tags: .NET, C#, Code Snippet, WPF, XML

3.09.2010

Integrating MEF with a K-means algorithm implementation

When we're interested in a new framework and want to try it out, we usually start by creating a small and simple demo application. Afterwards we start thinking of real-life situations where we could use it. Here, I'll describe my first real-life use of MEF.

I have a small application with a custom implementation of the K-means clustering algorithm. Common implementations of this algorithm attempt to find clusters of points, where points are related by the distance between them. In my custom implementation, the points are persons and the "distance" is actually computed by evaluating a series of constraints.

Each of these constraints is a distinct class, implementing an IConstraint interface.

Until now I had the following initialization code for constraints:

Then I had the following code to evaluate the constraints:

Note: here, the "distance" between persons is what I call the "concordance rate". The bigger the concordance rate, the "closer" the member is to the cluster.

A few days ago, I had to create a new constraint and when I saw that initialization code and "TODO" comment I decided it was time to change it. After careful thought, I decided MEF was the correct choice. With MEF, I managed to replace this tedious initialization code while reducing the coupling between the concrete constraints and the constraint evaluator.

I simply opened all the constraint classes and added the attribute [Export(typeof(IConstraint))], like this:

Then I instructed the ConstraintList property to import all the IConstraints, by marking the property with the attribute [ImportMany(typeof(IConstraint))], like this:

Last but not least, I had to instruct the class to compose the constraint list, like this:

Note that I'm using an AssemblyCatalog with the executing assembly on purpose, because I want to load the constraints from the current assembly. If I were to move all the constraints to another assembly (e.g. a separate "Contracts" or "Constraints" assembly), this code would be different.
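Since the original snippets aren't reproduced here, this is a self-contained sketch of the same pattern. Only IConstraint, ConstraintList, the Export/ImportMany attributes and the AssemblyCatalog over the executing assembly come from the post; the Person type, the two concrete constraints, the Evaluate signature, the ConstraintEvaluator name and averaging the scores into a "concordance rate" are all hypothetical. It needs a reference to System.ComponentModel.Composition (part of .NET Framework 4; a NuGet package on newer .NET).

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.Composition;
using System.ComponentModel.Composition.Hosting;
using System.Linq;
using System.Reflection;

// Hypothetical domain type.
public class Person
{
    public string City { get; set; }
    public int Age { get; set; }
}

public interface IConstraint
{
    // Hypothetical signature: 1.0 means full agreement, 0.0 none.
    double Evaluate(Person member, Person candidate);
}

[Export(typeof(IConstraint))]
public class SameCityConstraint : IConstraint
{
    public double Evaluate(Person member, Person candidate) =>
        member.City == candidate.City ? 1.0 : 0.0;
}

[Export(typeof(IConstraint))]
public class SimilarAgeConstraint : IConstraint
{
    // Agreement drops linearly to zero at a 10-year age difference.
    public double Evaluate(Person member, Person candidate) =>
        Math.Max(0.0, 1.0 - Math.Abs(member.Age - candidate.Age) / 10.0);
}

public class ConstraintEvaluator
{
    private readonly CompositionContainer _container;

    // MEF fills this with every IConstraint exported by the catalog.
    [ImportMany(typeof(IConstraint))]
    public IEnumerable<IConstraint> ConstraintList { get; set; }

    public ConstraintEvaluator()
    {
        // Only the executing assembly is scanned for exports.
        var catalog = new AssemblyCatalog(Assembly.GetExecutingAssembly());
        _container = new CompositionContainer(catalog);
        _container.ComposeParts(this);
    }

    // Hypothetical "concordance rate": the average of all constraint scores.
    public double ConcordanceRate(Person member, Person candidate) =>
        ConstraintList.Average(c => c.Evaluate(member, candidate));
}
```

Adding a new constraint then only means writing a class that implements IConstraint and carries the Export attribute; the evaluator itself doesn't change.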

There you go, just a simple refactoring that allowed me to reduce coupling and remove a TODO comment from my code! And I got to use MEF in a real scenario.

Published by Unknown at 7:59 PM

Tags: .NET4.0, C#, Case Study, MEF

3.08.2010

SQLite "available" for .NET 4.0

If you're a fan of SQLite and you've already started to use VS2010, you've probably noticed that your ADO.NET provider for SQLite no longer works as it should. It's now throwing an exception when you try to load data.

I've had issues with this as well, and yesterday I went back to the forums to see if a solution had already been found. At first sight, it looked like I was out of luck, because no new versions were available for download. However, after a closer look at their forum, I found this thread where an installer was supplied to install the provider in VS2010 Beta 2 and VS2010 RC. So here's the quick link for download!

I'm guessing this will only be available for download along with the other versions once it has been tested against the final VS2010. However, it seems to be working already, so if you're not developing anything critical, I guess it's OK to start using it. If this was the only thing stopping you from using VS2010, you're good to go!


3.03.2010

Changing and Rebuilding assemblies to update referenced assemblies

Problem

How do you change and rebuild a .NET assembly, without having or being able to access its source code, just to update its references? You might need this because you now wish to use a different version of a referenced assembly, because the reference's public key token has changed, or because some class has changed namespaces.

The problem described above is definitely NOT one we have to deal with on a daily basis. So what motivated this need?

In this case, we're dealing with third-party COM libraries. The problem is that the company developing them has not yet released Primary Interop Assemblies (PIAs) for these libraries. So at some point we felt the need to create our own normalized interops, so that every developer uses the same interop; otherwise we would have problems deploying all the applications built against different interops. In the process we also decided to change the namespaces (for normalization purposes).

Recently I needed to use an additional library (for which no normalized interop had been created). As usual, I started by adding a reference to that COM library to my Visual Studio project. When you add a COM reference, Visual Studio automatically creates an interop assembly for you. In this case, however, since this library references the COM libraries mentioned above, the generated interop wouldn't work: it referenced those libraries using their original namespaces, which can't be found in the "normalized" interops we're using.

Hence the need to change this interop assembly so that it complies with the other normalized references.

Solution

Since we don't have the source code, the only way to change an assembly is to disassemble it, change the IL code and reassemble it. We'll also re-sign the assembly (which is definitely needed if you wish to install it in the GAC, the Global Assembly Cache). Here are the four steps to accomplish this task:

Step 1: Disassemble the assembly

For this first step you'll need to use MSIL Disassembler (also known as ildasm).

Just open a Visual Studio command prompt and run "ildasm". This will present you with the ildasm GUI. From the File menu, open the target assembly, then choose "Dump" to create a text file with the assembly's MSIL.

Alternatively, for a more direct approach, just run the command:

ildasm targetAssembly.dll /out=targetAssembly.il

Step 2: Change the IL accordingly

Now comes the tricky part, where you really need to pay attention. Depending on what you're trying to achieve, you may have to change different things.

First, open the newly generated text file in your favorite text editor.

The referenced assemblies should be among the first lines of the file. Each reference will look something like this:

.assembly extern mscorlib
{
  .publickeytoken = (B7 7A 5C 56 19 34 E0 89 ) // .z\V.4..
  .ver 4:0:0:0
}

This, of course, is a reference to mscorlib, the core .NET library. Your references will have a different name (here it's "mscorlib"), a different public key token (here it's "B7 7A 5C 56 19 34 E0 89") and possibly a different version (here it's "4:0:0:0").

What you need to do next is look for the reference(s) you wish to change and update them accordingly. This can mean changing the name if you've renamed the assembly, changing the public key token if it has changed, or (most commonly) changing the version if you want to bind against a different assembly version. Note that the version here uses the format <major version>:<minor version>:<build number>:<revision>.

Now, if you've changed either the assembly name or the assembly's base namespace, you'll have to do a few mass find-and-replace operations, changing every type reference to the referenced assemblies you're targeting. Here's why: a simple call to Console.WriteLine shows up in the MSIL as

IL_0011: call void [mscorlib]System.Console::WriteLine(string)

Look specifically at the "[mscorlib]System.Console" part. It's a reference to the type System.Console in the assembly "mscorlib". References to types in your target referenced assemblies use the same syntax, so if you've changed either the assembly name or the base namespace, you'll have to find and replace them all to keep both DLLs aligned.

Notice that I said "base namespace": if several namespaces were changed arbitrarily, the number of find-and-replace operations multiplies accordingly.
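When the renames are regular, the find-and-replace can be scripted instead of done by hand. The C# below is a hypothetical helper (IlReferenceRewriter is not a tool from this post) that rewrites the ".assembly extern OldName" declaration and every "[OldName]OldNamespace." type reference in the dumped IL text. It assumes the base namespace was renamed as a whole, that the file is UTF-8 or plain ASCII, and that the names involved are not among those ildasm emits in single quotes; public key tokens and versions still have to be edited as described above, and the result deserves a review before reassembling.

```csharp
using System;
using System.IO;
using System.Text.RegularExpressions;

public static class IlReferenceRewriter
{
    public static string Rewrite(string il, string oldAssembly, string newAssembly,
                                 string oldNamespace, string newNamespace)
    {
        // 1. The reference declaration ".assembly extern OldAssembly"; the negative lookahead
        //    avoids touching longer names that merely share the prefix (e.g. "OldAssembly.Extra").
        string externPattern = @"\.assembly extern " + Regex.Escape(oldAssembly) + @"(?![\w.])";
        il = Regex.Replace(il, externPattern, _ => ".assembly extern " + newAssembly);

        // 2. Type references "[OldAssembly]OldNamespace.X" become "[NewAssembly]NewNamespace.X".
        string typeRefPattern = Regex.Escape("[" + oldAssembly + "]" + oldNamespace) + @"(?=\.)";
        il = Regex.Replace(il, typeRefPattern, _ => "[" + newAssembly + "]" + newNamespace);

        // 3. Types outside the old base namespace still need the new assembly name.
        return il.Replace("[" + oldAssembly + "]", "[" + newAssembly + "]");
    }

    // Usage: IlReferenceRewriter file.il oldAssembly newAssembly oldNamespace newNamespace
    public static void Main(string[] args)
    {
        if (args.Length != 5)
        {
            Console.Error.WriteLine("usage: IlReferenceRewriter file.il oldAssembly newAssembly oldNamespace newNamespace");
            return;
        }
        string il = File.ReadAllText(args[0]);
        File.WriteAllText(args[0], Rewrite(il, args[1], args[2], args[3], args[4]));
    }
}
```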

Step 3: Reassemble the assembly

Now that you've changed the MSIL to comply with the referenced assemblies, you'll have to reassemble your DLL. For this you'll need the MSIL Assembler (ilasm, the companion tool to the previously mentioned ildasm).

Once again, open a Visual Studio command prompt and execute the command:

ilasm /dll targetAssembly.il

This command will produce your brand new targetAssembly.dll.

Step 4: Sign the assembly

For this last step we'll use the Strong Name tool (sn.exe). You'll also need an SNK file to sign the assembly. You're probably signing all your assemblies with the same SNK, so you shouldn't have a problem finding it. However, if you need to create a new one, the command to execute is: "sn -k strongNameKey.snk"

I hope you haven't closed the VS command prompt, because you'll need it to execute the following command to sign your brand new assembly:

sn -Ra targetAssembly.dll strongNameKey.snk

Last but not least, to make sure you didn't forget anything, you should go fetch a nice cold beer. If you did forget something, I'm sure it will come to you while you're drinking!

Published by Unknown at 12:12 AM

Tags: .NET, Case Study, IL, Interop

3.01.2010

Lazy Loading

Today, I'll discuss one simple class that comes bundled with .NET 4.0. It's one of those small features that don't get much attention but truly come in handy. I'm talking about System.Lazy<T>, a simple class that provides support for lazy loading, so we no longer need to write the small pieces of code we've been writing for years whenever we had to deal with resources that are expensive to create.

It's a simple class with a simple purpose, therefore it has a very simple usage, which I'll show in the small snippet below.

The code above will produce the following output:

Before bird creation
Creating Bird
I am a Duck
Before eagle creation
Creating Eagle
I am a Eagle

Notice that the Bird class is only instantiated when "bird.Value" and "eagle.Value" are accessed.

In the example above, I've shown:

- the usage of Lazy<T> in a way that instantiation will be done through a call to the default constructor

- the usage of Lazy<T> with a delegate responsible for the creation itself. This delegate will of course only be called at instantiation time (when the Lazy<T> Value property is accessed)

There are also other Lazy<T> constructors that provide further options regarding thread safety, which I will not discuss here.
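The original snippet isn't reproduced here, so this is a minimal sketch along the same lines; the Duck and Eagle classes and their messages are made up for illustration. It shows both constructors discussed above: Lazy<T>(), which calls T's public parameterless constructor, and Lazy<T>(Func<T>), which runs the supplied delegate. In both cases the object is created on the first access to Value and the same instance is returned afterwards.

```csharp
using System;

// Hypothetical classes that announce when they are constructed.
public class Duck
{
    public Duck() { Console.WriteLine("Creating Duck"); }
    public string Describe() => "I am a Duck";
}

public class Eagle
{
    private readonly string _species;

    public Eagle(string species)
    {
        Console.WriteLine("Creating Eagle");
        _species = species;
    }

    public string Describe() => "I am a " + _species + " Eagle";
}

public static class Program
{
    public static void Main()
    {
        // Default-constructor form: Duck() runs on the first access to Value.
        var duck = new Lazy<Duck>();
        Console.WriteLine("Before duck creation");
        Console.WriteLine(duck.Value.Describe());

        // Factory form: the lambda runs on the first access to Value, and only once.
        var eagle = new Lazy<Eagle>(() => new Eagle("Golden"));
        Console.WriteLine("Before eagle creation");
        Console.WriteLine(eagle.Value.Describe());
        Console.WriteLine(eagle.Value.Describe()); // same instance, no second "Creating Eagle"
    }
}
```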


Published by Unknown at 1:13 AM

Tags: .NET, .NET4.0, C#, Lazy Loading

2.25.2010

PostSharp Goes Commercial

PostSharp goes commercial! It's a sad day. Don't get me wrong, I'm glad to see that the PostSharp guys, who have been doing fantastic work, are finally about to be rewarded. I'm just sad because I believe the companies I work for are never going to buy a license just because I like to produce clean code with aspects. If there's any way for them to avoid spending money on a license, I don't expect them to buy one. So what if the produced code is cleaner, easier to maintain and less coupled...

Ghost in the code

I recently had a healthy discussion with two colleagues about a design decision, in which we defended two very different approaches.

The subject under discussion was the consumption of a resource (think of it as a database, even though it isn't one), which could be done in two different ways:

- through a mapping mechanism that binds an entity (call it a business object) to the resource at compile time

- by binding to it by name at runtime

Up until now, everyone has been using something similar to the second alternative: runtime binding. What two of us were advocating was changing this.

Why? With the current situation, if you decide to change a data structure, you are creating an uncertain and potentially gigantic universe of latent errors, because there's no documentation of these structures, which can be in use by several applications and no one really knows which applications use it. The approach we're defending here has the advantage of turning this "uncertain and potentially gigantic universe of latent errors" into a small universe of concrete errors (typically compilation errors).
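To make the two alternatives concrete, here's a deliberately small C# sketch. The shared resource is stood in for by a dictionary of named fields, and Resource, FetchRow, Customer and the field names are all hypothetical. The scenario assumes a field has just been renamed from "CreditLimit" to "MaxCredit": code bound by name still compiles and silently gets nothing, while with the mapped entity the field names live in one place and renaming a property turns every stale usage into a compilation error.

```csharp
using System;
using System.Collections.Generic;

public static class Resource
{
    // Stand-in for the shared resource; "CreditLimit" was just renamed to "MaxCredit".
    public static IReadOnlyDictionary<string, object> FetchRow() =>
        new Dictionary<string, object> { ["Name"] = "ACME", ["MaxCredit"] = 1000m };
}

// Compile-time binding: one mapped entity; the field names appear only in FromRow.
public sealed class Customer
{
    public string Name { get; }
    public decimal CreditLimit { get; }

    private Customer(string name, decimal creditLimit)
    {
        Name = name;
        CreditLimit = creditLimit;
    }

    public static Customer FromRow(IReadOnlyDictionary<string, object> row) =>
        new Customer((string)row["Name"], (decimal)row["MaxCredit"]); // the only line that had to change
}

public static class Program
{
    public static void Main()
    {
        var row = Resource.FetchRow();

        // Runtime binding by name: this still compiles after the rename and silently yields null,
        // so the problem surfaces later, somewhere else, as unexpected behaviour.
        row.TryGetValue("CreditLimit", out object limitByName);
        Console.WriteLine(limitByName ?? "(missing)");

        // Mapped entity: consumers use a typed property; renaming it breaks the build at every usage.
        Customer customer = Customer.FromRow(row);
        Console.WriteLine(customer.CreditLimit);
    }
}
```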

One of the main advantages of this approach shows up when we do change a data structure (as in the example above): it then becomes a matter of dealing with a few easily and quickly identifiable bugs, which generally have a quick fix. With the current situation, you'll have to deal with an unknown number of errors that may reveal themselves through unexpected application behaviour, which is hard to trace back to the source code. So even if someone finds how to reproduce the bug, they'll probably spend most of their time figuring out where it's located in the source code. Furthermore, they might never be sure they've covered all the situations.

Besides, Murphy's Law will make sure these latent bugs arise at the worst possible time, when you have to deliver a complex piece of software on a very, very tight schedule with a deadline hanging over your head, leading to a hazardous situation!

This pretty much maps to a simplified discussion of static vs. dynamic languages, although with some small but meaningful differences. I believe languages are tools and, as such, we should choose the right tool for each job. I'm not defending static or dynamic languages here, as I believe each has different purposes and neither is the best tool for every situation.

The static vs. dynamic languages debate has been around for years and need not be rehashed here. However, a few points are relevant to this discussion.

Most of the disadvantages of dynamic languages can be mitigated through:

- Unit testing, because it reduces the need for static type checking by making sure the tested situations comply with the expected behaviour;

- Reduced coupling and higher cohesion, so that the impact of a source code change is most likely confined to a single specific location and doesn't spread to other application modules;

However, in this case, what we know and all agree on is that the current solution has pathological coupling and coincidental cohesion: every piece of functionality is potentially scattered throughout several places, and you're never sure who's using which resource, how it's being used and for which purpose. Also, several rules of thumb such as DRY (Don't Repeat Yourself) are repeatedly violated, so we've learned to always expect the worst from the implemented "Architecture".

Let's call it Chaos!

So in this case, when it comes to Safety vs. Flexibility, I choose Safety, because in my humble opinion the risks of choosing Flexibility are far greater.

Pick your poison!


Published by Unknown at 10:34 AM

Tags: Architecture, Design, Dynamic, Static

2.17.2010

Quick and Dirty Logging

Ever felt the need to quickly implement logging in your application, but didn't want to lose time learning (or remembering) how to use a real logging framework, or creating your own utility logging class?

Some developers tend to carry their own utility classes in their backpack, just in case they stumble upon one of these situations. Most of us, however, don't keep track of the utility classes we've written over the years, or tend to lose that code. So the last time it happened to me, I wrote this "Quick and Dirty Logging" class using a TraceListener.

Usage examples:

Implementation Details

Note that I decided to break into the debugger whenever an error or warning is logged, as long as the assembly is compiled in debug mode. I made this decision because at the time I wanted to be able to fix the errors using Visual Studio's "Edit and Continue" feature, shortening the debug-stop-rebuild-restart cycle and saving some time. This isn't meant to be production code, but that won't be a problem as long as you make sure you don't deploy debug assemblies anywhere other than a developer machine.

Also, since we're using the System.Diagnostics.Trace features, make sure tracing is enabled in your project (the TRACE compilation symbol needs to be defined), which is the default for Visual Studio C# projects.

Remember that there are logging frameworks far more customizable and faster than this small logging class. This isn't supposed to replace any other logging mechanism, it's just a quick solution empowered by its simplicity.
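The original class isn't reproduced here, so below is a minimal sketch of the idea, written from the description above: a static QuickLog class (a hypothetical name) that writes timestamped lines through System.Diagnostics.Trace to a TextWriterTraceListener and, in Debug builds, breaks on warnings and errors. The Debugger.IsAttached check is an addition in this sketch, so that nothing happens when the program runs without a debugger.

```csharp
using System;
using System.Diagnostics;

public static class QuickLog
{
    // Adds a file listener next to any listeners already configured.
    public static void Init(string logFilePath)
    {
        Trace.Listeners.Add(new TextWriterTraceListener(logFilePath));
        Trace.AutoFlush = true;
    }

    public static void Info(string message) => Write("INFO", message);

    public static void Warning(string message)
    {
        Write("WARN", message);
        BreakIfDebugging();
    }

    public static void Error(string message, Exception exception = null)
    {
        Write("ERROR", exception == null ? message : message + Environment.NewLine + exception);
        BreakIfDebugging();
    }

    // Trace.WriteLine calls are compiled away unless the TRACE symbol is defined.
    private static void Write(string level, string message) =>
        Trace.WriteLine($"{DateTime.Now:yyyy-MM-dd HH:mm:ss.fff} [{level}] {message}");

    // Calls to this method are removed by the compiler unless DEBUG is defined.
    [Conditional("DEBUG")]
    private static void BreakIfDebugging()
    {
        if (Debugger.IsAttached)
            Debugger.Break();
    }
}
```

Typical use would be a single QuickLog.Init("app.log") at start-up, followed by QuickLog.Info, QuickLog.Warning or QuickLog.Error wherever needed.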

Conclusion

Next time I feel the urge to create a quick logging mechanism, I'll try to remember this post and grab this code. But then again, when that happens, I'm pretty sure Murphy's Law will make sure I won't have internet access. The proxy will probably die on me while I'm opening the browser!

